The Wizard of Oozie

As per the official website, Apache Oozie is a "workflow scheduler system to manage Apache Hadoop jobs." It automates the running of Hadoop jobs through the use of a workflow engine and a coordinator engine. Oozie was made to work with other common Hadoop tools such as Pig, Hive, and Sqoop, but it can also can be extended to support custom Hadoop jobs.

We'll start by creating a database in MySQL called "oozie". For now, we won't create a table or populate it with data since the jobs in the workflow will take care of that:

mysql> create database oozie;

mysql> use oozie;

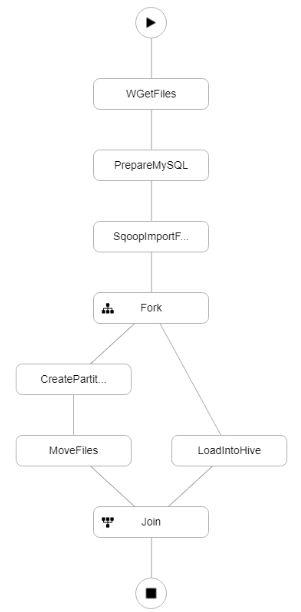

The information used for this table will come from a file called business.csv and it will later be copied to a folder in HDFS, where it can be used to import into Hive. There are a number of files that will make up the processes in this job. There is one file that will retrieve the csv file from data.sfgov.org; there is another file that will prepare the MySQL metadata and import the contents of the business.csv file; yet another will move the necessary files to their proper locations. In all, the flow graph looks like this:

Starting the Oozie job can be done from the command line:

[root@sandbox-hdp ~]# oozie job -oozie http://127.0.0.1:11000/oozie -config /root/OozieFiles/job/coordinator.properties -run

job: 0000000-180508225550199-oozie-oozi-C

Once started, the status can be checked via the graphic workflow manager:

or via the cli:

Workflow Name : Oozie In Action

App Path : hdfs://sandbox-hdp.hortonworks.com:8020/user/root/oozie/workflow/oozie_in_action.xml

Status : SUCCEEDED

Run : 0

User : root

Group : -

Created : 2018-05-08 23:04 GMT

Started : 2018-05-08 23:04 GMT

Last Modified : 2018-05-08 23:07 GMT

Ended : 2018-05-08 23:07 GMT

CoordAction ID: 0000000-180508225550199-oozie-oozi-C@2

Actions

------------------------------------------------------------------------------------------------------------------------------------

ID Status Ext ID Ext Status Err Code

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@:start: OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@WGetFiles OK job_1525819318682_0007 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@PrepareMySQL OK job_1525819318682_0008 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@SqoopImportFromMySQL OK job_1525819318682_0009 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@Fork OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@LoadIntoHive OK job_1525819318682_0011 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@CreatePartitionedFolder OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@MoveFiles OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@Join OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@end OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

We'll start by creating a database in MySQL called "oozie". For now, we won't create a table or populate it with data since the jobs in the workflow will take care of that:

mysql> create database oozie;

mysql> use oozie;

What the Workflow is All About

The information used for this table will come from a file called business.csv and it will later be copied to a folder in HDFS, where it can be used to import into Hive. There are a number of files that will make up the processes in this job. There is one file that will retrieve the csv file from data.sfgov.org; there is another file that will prepare the MySQL metadata and import the contents of the business.csv file; yet another will move the necessary files to their proper locations. In all, the flow graph looks like this:

Starting the Ignition

Starting the Oozie job can be done from the command line:

[root@sandbox-hdp ~]# oozie job -oozie http://127.0.0.1:11000/oozie -config /root/OozieFiles/job/coordinator.properties -run

job: 0000000-180508225550199-oozie-oozi-C

Once started, the status can be checked via the graphic workflow manager:

or via the cli:

Workflow Name : Oozie In Action

App Path : hdfs://sandbox-hdp.hortonworks.com:8020/user/root/oozie/workflow/oozie_in_action.xml

Status : SUCCEEDED

Run : 0

User : root

Group : -

Created : 2018-05-08 23:04 GMT

Started : 2018-05-08 23:04 GMT

Last Modified : 2018-05-08 23:07 GMT

Ended : 2018-05-08 23:07 GMT

CoordAction ID: 0000000-180508225550199-oozie-oozi-C@2

Actions

------------------------------------------------------------------------------------------------------------------------------------

ID Status Ext ID Ext Status Err Code

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@:start: OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@WGetFiles OK job_1525819318682_0007 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@PrepareMySQL OK job_1525819318682_0008 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@SqoopImportFromMySQL OK job_1525819318682_0009 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@Fork OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@LoadIntoHive OK job_1525819318682_0011 SUCCEEDED -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@CreatePartitionedFolder OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@MoveFiles OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@Join OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

0000002-180508225550199-oozie-oozi-W@end OK - OK -

------------------------------------------------------------------------------------------------------------------------------------

Once the job is complete, we can see that the MySQL database now has a table called 'business':

mysql> show tables;

+-----------------+

| Tables_in_oozie |

+-----------------+

| business |

+-----------------+

1 row in set (0.00 sec)

And that it now has data populating its fields:

mysql> select * from business limit 2;

+----------------+-------------------------+---------------------+-----------------+---------------------+---------------+-------+----------------+---------------------+-------------------+---------------------+-------------------+------------------+--------------+--------------+------------+------------+------------------------+-------------+-------------------------+----------+----------------------+---------------------+-----------------------------------+-------------------+----------------------------------------------------------------------+----+

| location_id | business_account_number | ownership_name | dba_name | street_address | city | state | source_zipcode | business_start_date | business_end_date | location_start_date | location_end_date | mail_address | mail_city | mail_zipcode | mail_state | naics_code | naics_code_description | parking_tax | transient_occupancy_tax | lic_code | lic_code_description | supervisor_district | neighborhoods_analysis_boundaries | business_corridor | business_location | id |

+----------------+-------------------------+---------------------+-----------------+---------------------+---------------+-------+----------------+---------------------+-------------------+---------------------+-------------------+------------------+--------------+--------------+------------+------------+------------------------+-------------+-------------------------+----------+----------------------+---------------------+-----------------------------------+-------------------+----------------------------------------------------------------------+----+

| 0474285-05-001 | 474285 | Pressed Juicery Inc | Pressed Juicery | 550 Gene Friend Way | San Francisco | CA | 94158 | 12/01/2012 | | 06/28/2013 | 06/28/2013 | 1550 17th Street | Santa Monica | 90404 | CA | 4400-4599 | Retail Trade | false | false | | | 6 | Mission Bay | | 550 GENE FRIEND WAY

SAN FRANCISCO, CA 94158

(37.768885, -122.390196) | 1 |

| 0474285-06-001 | 474285 | Pressed Juicery Inc | Pressed Juicery | 865 Market St #9001 | San+francisco | CA | 94103 | 12/01/2012 | | 11/12/2013 | 11/03/2015 | 1550 17th Street | Santa Monica | 90404 | CA | 4400-4599 | Retail Trade | false | false | | | | | | 865 MARKET ST

SAN FRANCISCO, CA 94103

(37.784904, -122.406929) | 2 |

+----------------+-------------------------+---------------------+-----------------+---------------------+---------------+-------+----------------+---------------------+-------------------+---------------------+-------------------+------------------+--------------+--------------+------------+------------+------------------------+-------------+-------------------------+----------+----------------------+---------------------+-----------------------------------+-------------------+----------------------------------------------------------------------+----+

2 rows in set (0.00 sec)

Working with Hive

The Oozie job also copied data to an HDFS folder, "/user/yarn/business". If we create an external Hive table in that location, it will also use the data once the table is created. This is what it will look like:

hive> CREATE EXTERNAL TABLE IF NOT EXISTS business(

> location_id varchar(100),

> business_account_number INT,

> ownership_name varchar(100),

> dba_name varchar(100),

> street_address varchar(100),

> city varchar(100),

> state varchar(100),

> source_zipcode varchar(100),

> business_start_date varchar(100),

> business_end_date varchar(100),

> location_start_date varchar(100),

> location_end_date varchar(100),

> mail_address varchar(100),

> mail_city varchar(100),

> mail_zipcode varchar(100),

> mail_state varchar(100),

> naics_code varchar(100),

> naics_code_description varchar(100),

> parking_tax varchar(100),

> transient_occupancy_tax varchar(100),

> lic_code varchar(100),

> lic_code_description varchar(100),

> supervisor_district varchar(100),

> neighborhoods_analysis_boundaries varchar(100),

> business_corridor varchar(100),

> business_location varchar(100),

> id int

> )

> PARTITIONED BY(jobid string)

> STORED AS PARQUET

> LOCATION "/user/yarn/business";

When running "MSCK REPAIR TABLE business;" the following occurs:

hive> MSCK REPAIR TABLE business;

OK

Partitions not in metastore: business:jobid=0000001-180508225550199-oozie-oozi-W business:jobid=0000002-180508225550199-oozie-oozi-W

Repair: Added partition to metastore business:jobid=0000001-180508225550199-oozie-oozi-W

Repair: Added partition to metastore business:jobid=0000002-180508225550199-oozie-oozi-W

Time taken: 1.203 seconds, Fetched: 3 row(s)

And we can see that the data is populated in the Hive fields:

hive> select * from business limit 2;

OK

0474285-05-001 474285 Pressed Juicery Inc Pressed Juicery 550 Gene Friend Way San Francisco CA 94158 12/01/2012 06/28/2013 06/28/20131550 17th Street Santa Monica 90404 CA 4400-4599 Retail Trade false false 6 Mission Bay 550 GENE FRIEND WAY

SAN FRANCISCO, CA 94158

(37.768885, -122.390196) 1 0000001-180508225550199-oozie-oozi-W

0474285-06-001 474285 Pressed Juicery Inc Pressed Juicery 865 Market St #9001 San+francisco CA 94103 12/01/2012 11/12/2013 11/03/20151550 17th Street Santa Monica 90404 CA 4400-4599 Retail Trade false false 865 MARKET ST

SAN FRANCISCO, CA 94103

(37.784904, -122.406929) 2 0000001-180508225550199-oozie-oozi-W

Time taken: 0.405 seconds, Fetched: 2 row(s)

Using Queries with Zeppelin

Finally, we can use queries from this table to get visualizations in Zeppelin:

There are various ways to interact with Oozie via the command line. To kill or suspend a workflow, use the following syntax:

oozie job -oozie http://127.0.0.1:11000/oozie -kill 15-20090525161321-oozie

oozie job -oozie http://127.0.0.1:11000/oozie -suspend 15-20090525161321-oozie

To start a workflow:

oozie job -oozie http://127.0.0.1:11000/oozie -start 15-20090525161321-oozie

To submit a workflow:

oozie job -oozie http://127.0.0.1:11000/oozie -config /training/apps/oozie/job.properties -submit

To resume a workflow:

oozie job -oozie http://127.0.0.1:11000/oozie -resume 15-20090525161321-oozie

This has been an introductory look at Oozie and its various uses. It's a fascinating tool in the Hadoop stack and worth investigating further!

Comments

Post a Comment